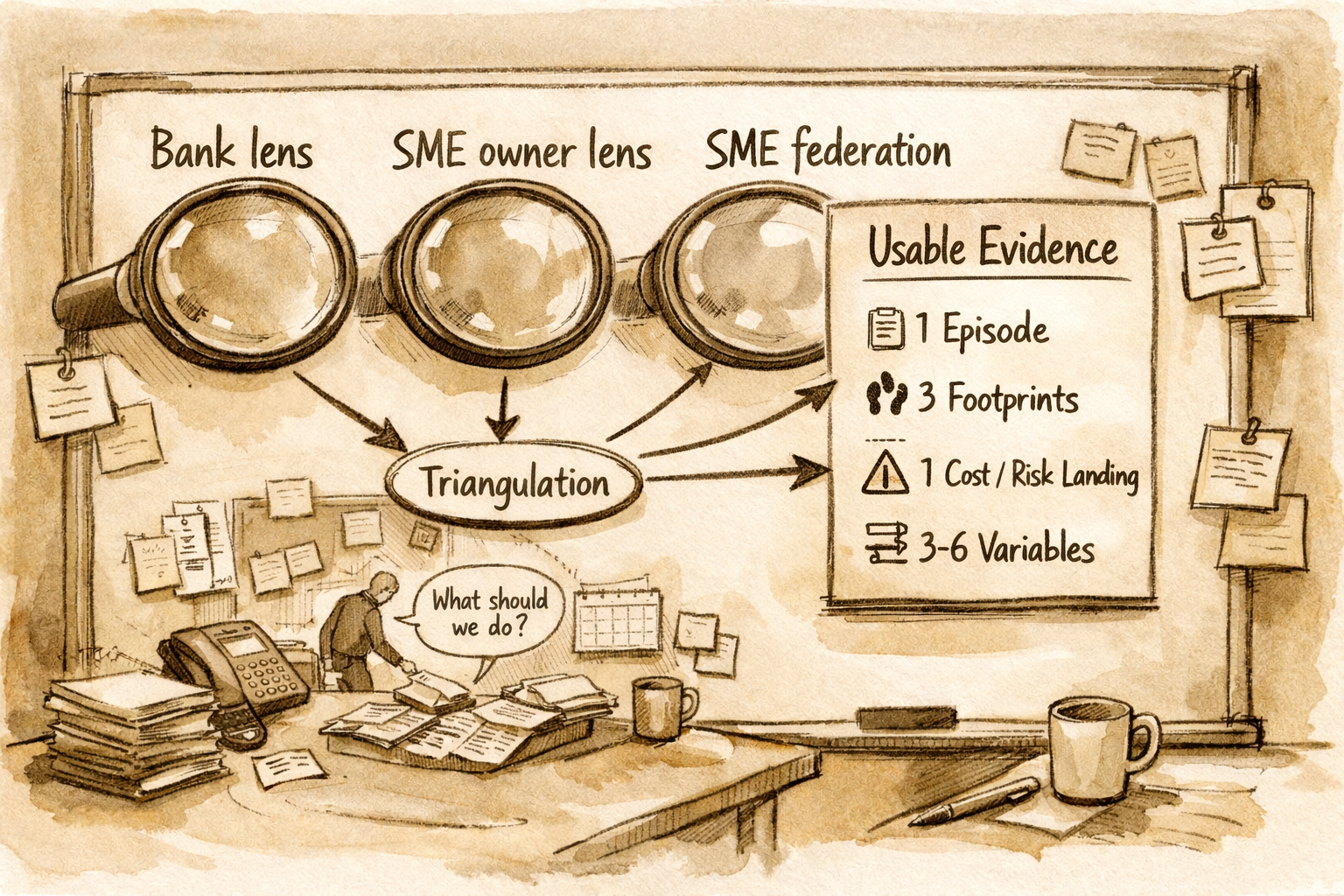

What I do rests on three field signals

- First: low-risk SME owners tell banks, “You don’t see how we prevent risk.” Their resilience sits in everyday agreement infrastructure across their ecosystem, and it does not become legible in standard credit routines.

- Second: zooming out, the leadership of a national SME federation representing around one million firms confirmed the same pattern, estimating that roughly 70–75% of firms operate in fragile agreement systems that increase failure risk.

- Third: banks confirm the gap. After several conversations with senior risk and portfolio managers, a major bank invited me to submit a project proposal because they recognised: “We don’t have a defensible way to measure systemic risk.” The result is portfolios holding high-risk firms that systematically overestimate their own agreement quality.

I wonder: might our economy be running on two operating systems? Most SMEs are trapped in collapse-prone agreement dynamics, while a considerable minority operates under a different agreement operating system. This supports Ritchie-Dunham's (2014, 2024) work. Most interestingly, the signals also suggest that our education primarily reinforces high-risk practices. My applied work, with international partners, measures how the second operating system differs from the first, so that we can educate a generation able to run organizations that thrive rather than collapse under pressure.

Everyone sees the blind spot; I make it measurable by turning agreement quality into decision-grade credit-risk evidence.

In my banking-side notes, I’ve already described the tension I observed: some SMEs feel that the way they manage risk, through everyday coordination within the firm and across customers, suppliers, and partners, does not become legible within standard credit workflows (Hinske, 2026b). This post is the counterpart. It is about what becomes visible when you sit with the support system that sees patterns across large parts of the SME landscape, and when you then treat those patterns as hypotheses that must survive contact with the field.

Note: I am keeping this fully non-attributable. I will not name the umbrella association, offices, the workshop context, participants, or locations. What I can say without compromising anonymity is that I facilitated a multi-hour workshop with all senior leaders of an EU-based umbrella federation representing roughly one million SMEs (Hinske, 2025a). The purpose was not “facilitation.” It was a disciplined research move: translating high-volume field pattern recognition into a testable measurement claim.

A pattern claim with real-world weight

The leaders in that room were asked a simple prevalence question: how often they see collapsing coordination patterns, as opposed to flourishing ones, in the firms they accompany. Their average estimate was ~70–75% “Pattern A” (reactive, friction-heavy, collapsing agreements) versus ~25–30% “Pattern B” (stable, proactive, flourishing agreements), with individual estimates ranging from 60/40 to 95/5 (Hinske, 2025a). They then drew a second distinction that matters for my PhD: a similar split between extractive coordination and regenerative, capacity-building agreements (Hinske, 2025a).

I did not treat those numbers as “statistics.” I treated them as a high-authority field hypothesis: a practice-based distribution claim that can be wrong but cannot be ignored. It is exactly the kind of claim that either turns into empty rhetoric or becomes the starting point for building decision-grade evidence.

This is where my epistemic discipline kicks in. In CAESI, claims earn credibility through structured confrontation with practice: do practitioners recognize the distinctions, can they use them, and do the patterns hold when you change vantage points and evidence channels (Hinske, 2025a).

Why the Agreements Health Check matters here (and why it is not “soft”)

To stress-test the hypothesis within SMEs, I used the Agreements Health Check (AHC), a tool developed at the Institute for Strategic Clarity that served as a starting point in my PhD fieldwork. I used it as an entry signal, not as a diagnosis, because it captures lived coordination as a yes/no orientation to what is workable or unworkable, enabling or constraining (Hinske, 2025d). The hinge is intersubjective coverage: a single response is not “wrong,” but it is not shared reality either; role-diverse participation turns the signal into an initial approximation of how the system is experienced across levels and functions (Hinske, 2025d).

That mattered in the follow-up workshops because it exposed a recurring asymmetry I now treat as structurally important: resilient firms often do not feel seen in formal risk routines, while fragile firms often overestimate their agreement quality. Naming the overestimation pattern is not a moral critique; it points to a measurement problem that hides fragility behind confident self-descriptions (Hinske, 2025b; Hinske, 2025a). In other words, the gap is not only that “banks don’t understand SMEs.” It also runs through the SME landscape itself, because many systems lack reliable internal mechanisms for calibrating their self-image against their enacted agreements (Hinske, 2025b).

If you want the method behind this move (how I avoid turning it into storytelling), I laid out the evidence discipline in “Survey ≠ Diagnosis” (Hinske, 2025d) and, in a separate note, the 35-minute protocol I use to turn one real episode into inspectable evidence bundles (Hinske, 2026a).
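The two ideas in this section, intersubjective coverage and the self-image asymmetry, can be sketched in a few lines of code. This is a minimal, hypothetical illustration: the `Response` record, item names such as `decisions_clear`, the role labels, and the choice of the owner as the "self-description" are assumptions made for the example, not the AHC's actual items or scoring rules. The calibration gap (owner's share of "workable" answers minus the mean share reported by other roles) is one possible proxy, not the method described in the posts cited above.

```python
from collections import defaultdict  # noqa: F401  (kept minimal; not required below)
from dataclasses import dataclass
from statistics import mean


@dataclass
class Response:
    """One hypothetical AHC-style response: a role plus yes/no answers.

    True = experienced as workable/enabling, False = unworkable/constraining.
    """
    role: str
    answers: dict[str, bool]


def share_workable(response: Response) -> float:
    """Fraction of this respondent's items answered as workable."""
    if not response.answers:
        raise ValueError("response has no answers")
    return sum(response.answers.values()) / len(response.answers)


def summarize(responses: list[Response], required_roles: set[str],
              self_role: str = "owner") -> dict:
    """Check role coverage for one firm and compare a self-description with other roles."""
    roles_present = {r.role for r in responses}
    missing = sorted(required_roles - roles_present)
    # A single voice is a signal, not shared reality: every expected role
    # must be present, and more than one role must have answered.
    intersubjective = not missing and len(roles_present) > 1

    self_scores = [share_workable(r) for r in responses if r.role == self_role]
    other_scores = [share_workable(r) for r in responses if r.role != self_role]
    gap = None
    if self_scores and other_scores:
        # Positive gap: the self-description runs ahead of what other roles report.
        gap = mean(self_scores) - mean(other_scores)
    return {"missing_roles": missing, "intersubjective": intersubjective,
            "calibration_gap": gap}


# Illustrative responses from one firm (not field data).
responses = [
    Response("owner", {"decisions_clear": True, "handoffs_work": True}),
    Response("team_lead", {"decisions_clear": True, "handoffs_work": False}),
    Response("frontline", {"decisions_clear": False, "handoffs_work": False}),
]
summary = summarize(responses, {"owner", "team_lead", "frontline"})
print(summary)
```

A positive gap is meant as a prompt for inquiry, not a verdict; if only the owner answers, the sketch reports the missing roles and returns no gap at all rather than a misleadingly confident number.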

What the umbrella-system workshop added that my bank work cannot add

Bank-side evidence is strong on financial legibility. The umbrella system is strong on coordination legibility, not as opinion, but as accumulated exposure to failure modes, recovery paths, and recurring structural blockers across regions (Hinske, 2025a). That is why the workshop did not converge toward more narrative. It converged toward a design requirement: a measurement approach that can show whether advisory support changes coordination reality over time, in a way that leadership can use.

That workshop logic translated into a practical measurement design: a small number of regions, a bounded number of firms per region, a baseline measurement followed by a second readout after a defined interval, and a time window short enough to hold attention yet long enough to detect meaningful change (Hinske, 2025c). The purpose is to design a repeatable instrument that produces comparable impact signals without creating administrative theatre (Hinske, 2025c).
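The baseline-and-readout logic can be illustrated with a minimal sketch. It assumes, hypothetically, that each readout is a tuple of region, firm ID, wave label (`"baseline"` or `"followup"`) and a 0..1 agreement-quality score; none of these names or scales come from the working papers cited above. Only firms measured at both points enter the comparison.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical readout record: (region, firm_id, wave, score), where wave is
# "baseline" or "followup" and score is an illustrative 0..1 agreement-quality value.
Readout = tuple[str, str, str, float]


def change_by_region(readouts: list[Readout]) -> dict[str, dict[str, float]]:
    """Mean baseline-to-follow-up change per region, using paired firms only."""
    waves_by_firm: dict[tuple[str, str], dict[str, float]] = defaultdict(dict)
    for region, firm, wave, score in readouts:
        waves_by_firm[(region, firm)][wave] = score

    deltas: dict[str, list[float]] = defaultdict(list)
    for (region, _firm), waves in waves_by_firm.items():
        # A firm without both readouts cannot show change, so it is skipped.
        if "baseline" in waves and "followup" in waves:
            deltas[region].append(waves["followup"] - waves["baseline"])

    return {
        region: {"n_firms": len(values), "mean_change": mean(values)}
        for region, values in deltas.items()
    }


# Illustrative readouts only (not field data).
readouts = [
    ("north", "f1", "baseline", 0.40), ("north", "f1", "followup", 0.55),
    ("north", "f2", "baseline", 0.60), ("north", "f2", "followup", 0.60),
    ("south", "f3", "baseline", 0.50),  # no follow-up yet, so excluded
]
result = change_by_region(readouts)
print(result)
```

Pairing firms, rather than comparing regional averages across waves, keeps attrition from masquerading as change; whether that is the right choice for a real instrument is a design question the sketch does not settle.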

This is also where the bridge to banking becomes concrete. If banks need defensible, repeatable signals that can survive scrutiny, then the support system needs measurement outputs that do not collapse into feel-good stories either. The overlap is the missing layer I named in the banking note: the system lacks a reliable way to translate agreement quality into evidence that can travel across cases and institutions without drifting into narrative or fake precision (Hinske, 2026b). I use conservative translation on purpose: when I connect agreement quality to risk language, I treat numbers as hypotheses with explicit uncertainty bounds, not as “precision theatre” (Hinske, 2025e).
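One standard way to attach explicit uncertainty bounds to a share, such as the fraction of sampled firms showing a given pattern, is the Wilson score interval for a binomial proportion. It is swapped in here as a familiar, generic technique for illustration; it is not necessarily the translation method described in Hinske (2025e), and the counts below are invented.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= successes <= n:
        raise ValueError("successes must lie between 0 and n")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp only to guard against floating-point drift outside [0, 1].
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Illustrative counts only: 7 of 10 sampled firms classified as "Pattern A".
low, high = wilson_interval(7, 10)
print(f"point estimate {7 / 10:.2f}, 95% interval {low:.2f} to {high:.2f}")
```

With only ten firms the interval runs from roughly 0.40 to 0.89, so a small sample cannot tell a 70/30 split apart from a 50/50 one. The same point estimate from 1,000 firms produces a much narrower band, which is the kind of explicit bound that keeps a number from turning into precision theatre.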

We keep calling it “SME risk,” but we’re often measuring the wrong thing. The blind spot is agreement quality: the hidden coordination patterns that determine whether a firm absorbs shocks or drifts into fragility. This video explains the gap, and why making agreement signals legible can improve early warning without fake precision.

Script & research: Christoph Hinske; AI narration/visuals: Google NotebookLM (Video Overview); Final edit & publication: Christoph Hinske

The PhD context: what I’m actually doing here

This post reflects the core move of my PhD: building a shared evidence language for SME flourishing that does not belong to any one institutional lens (banking, advisory, or the firm itself) but instead “belongs” to the ecosystem. CAESI provides the method: iterate between cases and patterns, validate through multiple perspectives and data signals, and keep the inquiry action-engaged without sliding into intervention theatre (Hinske, 2025a). My epistemological grounding is simple: I treat knowledge claims as provisional structures that must remain falsifiable through disciplined confrontation with field reality, not as narratives that become “true” through repetition (Hinske, 2025a). The methodological rationale is equally simple: I sat with umbrella-system leaders to develop a high-authority hypothesis, tested it in the field with SMEs and in banking conversations, and now pursue the gap as an applied PhD problem. That is an engaged-scholar commitment to make coordination legible without collapsing inquiry into consultancy (Hinske, 2025b; Hinske, 2026b).

Ecosystem-wide flourishing is the content lens: SMEs do not flourish in isolation; they flourish when agreements across their stakeholder ecosystem become visible, negotiable, and capable of learning, so that coordination improves, friction drops, and risk is not silently pushed downstream until it becomes financial damage (Hinske, 2025b). In that sense, “support” and “finance” are not separate worlds. They are two lenses on the same agreement infrastructure, and the work ahead is to build sturdy measurement bridges so they can stop talking past each other.

References

Hinske, C. (2025a). Agreements as a lever: Testing assumptions with national business leaders [Guest post]. Reflections of a Pactoecographer. https://jimritchiedunham.wordpress.com/2025/10/20/agreements-as-a-lever-testing-assumptions-with-national-business-leaders/

Hinske, C. (2025b). Exploring agreements for ecosystem-wide flourishing [Guest post]. Reflections of a Pactoecographer. https://jimritchiedunham.wordpress.com/2025/09/01/exploring-agreements-for-ecosystem-wide-flourishing/

Hinske, C. (2025c). Umbrella Organization impact measurement: Conversation groundwork (versions 1 and 2) [Unpublished internal working papers].

Hinske, C. (2025d). Survey ≠ diagnosis: Using a valid signal without overclaiming it. 360Dialogues. https://360dialogues.com/phd-notes/survey-diagnosis-using-a-valid-signal-without-overclaiming-it

Hinske, C. (2025e). From agreement quality to financial risk: Conservative translation without fake precision. 360Dialogues. https://360dialogues.com/phd-notes/from-agreement-quality-to-financial-risk-conservative-translation-without-fake-precision

Hinske, C. (2026a). A 35-minute interview that produces evidence. 360Dialogues. https://360dialogues.com/phd-notes/a-35-minute-interview-that-produces-evidence

Hinske, C. (2026b). Different banking portfolios, one gap: Turning “agreement footprints” into usable evidence. 360Dialogues. https://360dialogues.com/phd-notes/two-banking-portfolios-one-gap-turning-agreement-footprints-into-usable-evidence

Ritchie-Dunham, J. L. (2014). Ecosynomics: The science of abundance. Vibrancy Publishing.

Ritchie-Dunham, J. L. (2024). Agreements: Your choice. Vibrancy Ins, LLC.