A 35-Minute Interview That Produces Evidence

Most people can talk about “impact” for hours. But when you ask for one concrete episode, observable traces, and where costs and risks land, the conversation often turns vague.

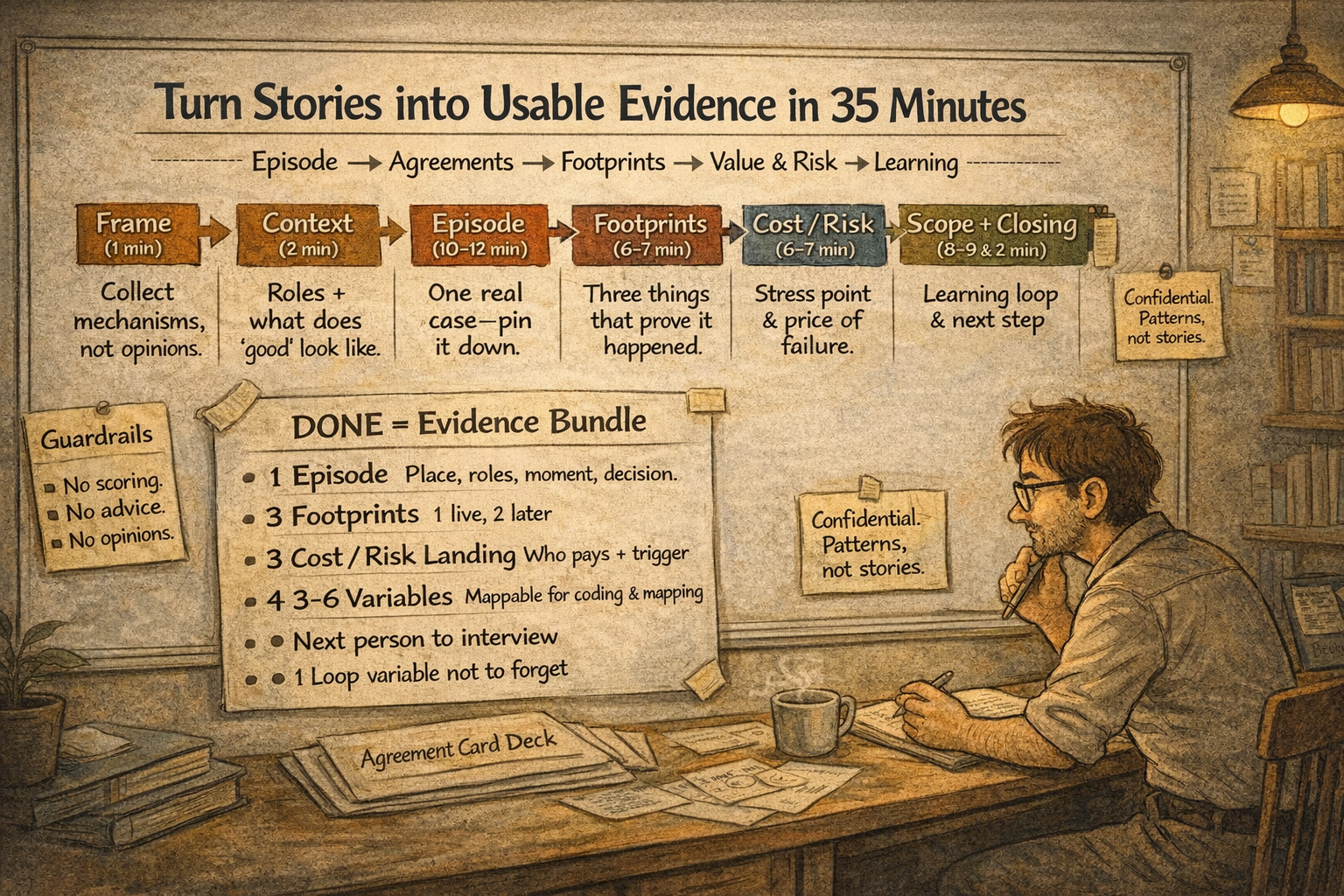

So I built a short online interview protocol that reliably yields usable evidence in under 35 minutes — without turning the interview into a survey, a diagnosis, or a consulting session.

This protocol is designed for my CAESI workflow: case-informed, action-engaged systems inquiry. The interview serves as the case-level intake: it produces material that can later be mapped (qualitative system dynamics models), coded (patterns), and tested (small next steps) in dialogue with stakeholders.

Confidentiality: how I write about fieldwork

In my PhD Notes, I do not publish identifiable field details—no names, no traceable quotes, no operational specifics that would expose partners. I write patterns, not stories — and I keep examples abstract and anonymized.

(Transparency: I co-develop structures like this with AI as a thinking partner; the interviewing, interpretation, and accountability remain mine. See my AI Disclosure Statement for more details.)

A note on the Agreement Card Deck — present, but not “on the table”

I also use my Agreement Card Deck as a quiet backbone for this interview.

Important nuance: the interview is not driven by the cards. I do not “pull cards” in front of participants, and I am not steering them toward pre-selected answers. The deck functions like an internal quality checklist, reminding me of the agreement dimensions that typically shape coordination and impact (decision rights, boundaries, bottlenecks, early indicators, etc.).

In other words, the conversation stays natural and case-specific. The deck helps me stay systematically curious — and later helps me code what I heard into comparable patterns.

That is the CAESI move: stay close to lived episodes, then abstract carefully.

The 35-minute online interview protocol

Goal per call: one episode + three footprints + cost/risk landing — plus material for relationships, regional value creation, and learning loops.

(0) Frame (60–90 seconds)

“I am not collecting opinions. I am collecting mechanisms: episode → agreements → footprints → value & risk → learning loop. No scoring, no advice.”

This keeps the conversation out of PR mode and into observable reality.

(1) Context (2 minutes)

Role & field of view

“What is your role in this ecosystem, and which part of the system do you see best?”

Purpose in one sentence

“In 12 months: what would make you say this was a good year?”

(Behind the scenes, this anchors the “purpose” lens from agreement-based work — without turning it into a values interview.)

(2) One concrete episode (10–12 minutes)

Choose the episode

“Pick one concrete situation from the last few months where the [insert case specifics] work created a real effect.”

Harden it (mandatory fields)

“Where was it? Who was involved (roles/organizations)? What was the critical moment or decision? What happened after?”

Agreement lens

“What (often implicit) agreement made this possible, or almost killed it?”

Rescue prompt (when answers get vague)

“Say it like a police report: place – roles – moment – decision – consequence.”

(This is where CAESI earns its keep: it stays close to an actual case moment.)

(3) Footprints (6–7 minutes)

Collect three traces (non-negotiable)

“What are three things I could see that prove this episode happened?”

(Examples: run sheet, invitation, photo, message thread, calendar chain, partner list, ticketing/attendance log, brief, evaluation note.)

One now, two later

“Can you show one trace right now (screen/file name), and send the other two afterwards?”

(This turns the episode from a claim into something verifiable.)

(4) Cost/Risk landing (6–7 minutes)

Landing

“When it does not work: where does the price land (time, stress, reputation, money, drop-offs), and for whom exactly?”

Risk shift pattern

“Who typically catches the fall when something breaks, what triggers it, and what is an early warning sign?”

This is often the missing layer in public impact stories: the system produces value, but it also redistributes cost.

(5) The promised scope (8–9 minutes)

This section ensures the interview stays aligned with what I committed to deliver: impact across relationships, regional value creation, and learning loops.

Relationships

“Which relationship became stronger or newly formed, and how do you see that in behavior?”

Regional value creation

“What tangible outcomes emerged (social/cultural/economic), and for whom?”

Learning loop (embedded, not ‘we learned a lot’)

“What do you do provably differently than six months ago: routine, rule, format, handoff, decision path?”

(6) Closing (2 minutes)

Coverage

“Who would likely describe this episode differently (another role/partner) and should be the next person I talk to?”

Loop anchor (20 seconds)

“If I draw a loop from this: X↑ → Y↑ → … what is one variable I must not forget?”
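The loop anchor question borrows the notation of causal loop diagrams from qualitative system dynamics. Each link has a polarity: the effect moves either in the same direction as the cause or in the opposite direction. By the usual convention, a loop with an even number of opposite-direction links (including zero) is reinforcing, and a loop with an odd number is balancing. The sketch below shows one way to record a loop drafted during the call and classify it under that convention. The `Link` type, the `loop_polarity` function, and the example variables are hypothetical illustrations, not part of the protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One causal link in a loop, e.g. X↑ → Y↑ (hypothetical structure)."""
    cause: str
    effect: str
    sign: int  # +1: effect moves in the same direction as cause; -1: opposite direction


def loop_polarity(links: list[Link]) -> str:
    """Classify a closed causal loop as 'reinforcing' or 'balancing'."""
    if not links:
        raise ValueError("a loop needs at least one link")
    # Pair each link with its successor; the last link must lead back to the first cause.
    for current, following in zip(links, links[1:] + links[:1]):
        if current.sign not in (1, -1):
            raise ValueError(f"sign must be +1 or -1, got {current.sign}")
        if current.effect != following.cause:
            raise ValueError(
                f"loop breaks between {current.effect!r} and {following.cause!r}"
            )
    negatives = sum(1 for link in links if link.sign == -1)
    # Even number of opposite-direction links: the loop amplifies change.
    return "reinforcing" if negatives % 2 == 0 else "balancing"


if __name__ == "__main__":
    # Hypothetical variables, not taken from any interview.
    loop = [
        Link("trust between partners", "joint formats", +1),
        Link("joint formats", "coordination load", +1),
        Link("coordination load", "trust between partners", -1),
    ]
    print(loop_polarity(loop))  # one opposite-direction link -> balancing
```

The function checks that the loop actually closes, because a half-drawn chain (X↑ → Y↑ → …) is exactly what the “one variable I must not forget” question is meant to complete.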

Three micro-probes (only when needed)

These are optional, 20–40 seconds each. They exist because online interviews with “medium answers” tend to blur exactly these points.

Decision rights: “Who decides what here — and where do decisions get stuck?”

Handoffs / gray zones: “Where is the handoff point that causes friction: ‘not us’ / ‘they should’?”

Bottleneck / shared resource: “Which resource is scarce or contested — and where is the bottleneck?”

Again, I do not need to announce any framework. I use these probes only to clarify what the episode already implies.
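You can add up the time budgets in the block headings, plus the optional micro-probes, to check the overall call length. The minimal sketch below sums the stated (low, high) ranges. It assumes each of the three probes is used at most once per call. The names `BLOCKS`, `PROBE_SECONDS`, `MAX_PROBES`, and `call_length` are hypothetical.

```python
# Minutes per block as (low, high), copied from the protocol headings.
BLOCKS = {
    "(0) Frame": (1.0, 1.5),  # 60-90 seconds
    "(1) Context": (2.0, 2.0),
    "(2) One concrete episode": (10.0, 12.0),
    "(3) Footprints": (6.0, 7.0),
    "(4) Cost/risk landing": (6.0, 7.0),
    "(5) The promised scope": (8.0, 9.0),
    "(6) Closing": (2.0, 2.0),
}

# Optional micro-probes: 20-40 seconds each.
PROBE_SECONDS = (20, 40)
MAX_PROBES = 3  # assumption: each of the three probes is used at most once


def call_length(probes_used: int = 0) -> tuple[float, float]:
    """Return the (low, high) length of one call in minutes."""
    if not 0 <= probes_used <= MAX_PROBES:
        raise ValueError(f"probes_used must be between 0 and {MAX_PROBES}")
    low = sum(lo for lo, _ in BLOCKS.values())
    high = sum(hi for _, hi in BLOCKS.values())
    # Convert probe seconds to minutes and add them to both bounds.
    low += probes_used * PROBE_SECONDS[0] / 60
    high += probes_used * PROBE_SECONDS[1] / 60
    return low, high


if __name__ == "__main__":
    for n in range(MAX_PROBES + 1):
        low, high = call_length(n)
        print(f"{n} probe(s): {low:.1f} to {high:.1f} minutes")
```

Without probes, the lower bounds add up to 35.0 minutes and the upper bounds to 40.5 minutes. Fitting the call into 35 minutes therefore depends on keeping each block near its minimum.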

My “done” criteria per interview

I end the call only if I have:

One episode (place, roles, critical moment, decision, consequence)

Three footprints (one shown/named live, two to be shared)

Cost/risk landing (who pays, trigger, early warning sign)

Three to six variable candidates for later causal mapping and pattern coding

If I do not have those, the interview did not fail — my questions did.
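The “done” criteria work like a checklist over the notes taken during the call. The minimal sketch below assumes the notes are captured in a simple record with one field per criterion. `InterviewRecord`, `TEXT_FIELDS`, `missing_items`, and all field names are hypothetical, and the placeholder values stand in for anonymized content.

```python
from dataclasses import dataclass, field


@dataclass
class InterviewRecord:
    """Hypothetical notes structure for one call, mirroring the 'done' criteria."""
    # One episode: place, roles, critical moment, decision, consequence.
    place: str = ""
    roles: list[str] = field(default_factory=list)
    critical_moment: str = ""
    decision: str = ""
    consequence: str = ""
    # Footprints: shown or named live vs. promised for later.
    footprints_live: list[str] = field(default_factory=list)
    footprints_promised: list[str] = field(default_factory=list)
    # Cost/risk landing: who pays, trigger, early warning sign.
    who_pays: str = ""
    trigger: str = ""
    early_warning: str = ""
    # Variable candidates for causal mapping and pattern coding.
    variables: list[str] = field(default_factory=list)


TEXT_FIELDS = ("place", "critical_moment", "decision", "consequence",
               "who_pays", "trigger", "early_warning")


def missing_items(record: InterviewRecord) -> list[str]:
    """List the unmet 'done' criteria; an empty list means the call can end."""
    missing = [name for name in TEXT_FIELDS if not getattr(record, name).strip()]
    if not record.roles:
        missing.append("roles")
    if not record.footprints_live:
        missing.append("one footprint shown or named live")
    if len(record.footprints_live) + len(record.footprints_promised) < 3:
        missing.append("three footprints in total")
    if not 3 <= len(record.variables) <= 6:
        missing.append("three to six variable candidates")
    return missing


if __name__ == "__main__":
    # Placeholder values only; real notes stay anonymized.
    notes = InterviewRecord(
        place="(venue)",
        roles=["organizer", "partner organization"],
        critical_moment="(moment)",
        decision="(decision)",
        consequence="(consequence)",
        footprints_live=["run sheet"],
        footprints_promised=["invitation"],
        who_pays="(role)",
        trigger="(trigger)",
        early_warning="(signal)",
        variables=["trust", "coordination load"],
    )
    print(missing_items(notes))
    # -> ['three footprints in total', 'three to six variable candidates']
```

Returning the list of gaps, rather than a single yes/no, points directly at the question still to ask before ending the call.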

Why this stays CAESI-clean

CAESI is not about forcing reality into a framework. It is about staying close to lived moments, collecting evidence, and then abstracting responsibly into maps and patterns that can be tested and refined with stakeholders.

That is why the Agreement Card Deck sits in the background: it supports consistency and comparability without becoming a script that people feel they are being pushed through.

The field stays in the lead. The method follows.